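- Tell investing and speculating apart. Investing needs a good entry point; in speculation both the entry and the exit matter. Never hold a speculative position so long that you start consoling yourself that it is an investment.

- Investing calls for left-side trading (buying into a decline): once the price is low enough, you can get in. Speculation calls for right-side trading: act only after the trend has changed.

- Most companies’ revenue is affected by the broader environment. Since analyzing an individual stock cannot avoid analyzing the macro economy anyway, you might as well just buy VOO and save yourself the work of analyzing individual stocks.

- Buying a stock that will have no earnings in the near term, or that has a PE of 100 or more, is speculation no matter how good the rationale. Bringing such a valuation back to a reasonable range requires massive earnings growth, and one misstep can be fatal. It is pure gambling.

- Large positions for investing, small positions for speculating.

- Don’t day-trade when speculating; you will get harvested by Wall Street’s trading algorithms. Hold for at least several days and at most a few months. Any longer and you are simply stuck with it.

- When speculating, watch market sentiment: when sentiment is good the market ignores all bad news, and when sentiment is bad it ignores all good news.

- Investing means buying when the PE is reasonable, which is usually when market sentiment is terrible, so buying goes very much against human nature.

- Keep investment positions and speculative positions separate, and keep the tickers separate too. Don’t speculate on an investment holding, or vice versa, so that your brain stays clear about whether you are investing or speculating.

- Set a stop-loss when speculating. If you were wrong, admit it instead of stubbornly digging in.

- Investing takes patience. Work to reduce the influence of emotion, and trust that pigs don’t fly.

- Try to add to or trim an investment position only after earnings are reported. A promise that has not turned into profit is still only a promise, and a business that doesn’t make money is never a good business.

- Don’t bet on earnings reports: not when speculating, and even less when investing.

- Only two reasons justify trimming an investment: a significant change in fundamentals, or needing to pull money out of the stock market for other uses.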
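The PE rule in the list above comes down to simple arithmetic, which the following minimal sketch tries to make concrete. It assumes the share price stays flat, and the “reasonable” PE of 25 in the usage line is purely an illustrative assumption, not a number from the rules. The function and constant names are hypothetical.

```python
from typing import Optional

SPECULATION_PE = 100.0  # threshold taken from the rule in the list above


def is_speculation(pe: Optional[float]) -> bool:
    """Return True when the rule calls the stock speculative.

    pe is None models a company with no earnings (no meaningful PE).
    """
    return pe is None or pe >= SPECULATION_PE


def required_earnings_growth(current_pe: float, reasonable_pe: float) -> float:
    """Factor by which earnings must grow for the PE to fall from
    current_pe to reasonable_pe, assuming the share price does not move."""
    if current_pe <= 0 or reasonable_pe <= 0:
        raise ValueError("PE values must be positive")
    # PE = price / earnings, so at a fixed price the earnings
    # must scale by current_pe / reasonable_pe.
    return current_pe / reasonable_pe


if __name__ == "__main__":
    # Assumed example: a PE of 100 and a "reasonable" PE of 25.
    print(required_earnings_growth(100, 25))  # 4.0
```

In other words, under these assumptions a stock at a PE of 100 needs its earnings to quadruple just to justify today’s price, which is why one bad quarter can hurt so much.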

How to bypass carrier hotspot (tethering) throttling / limits? (T-Mobile, Verizon, AT&T, etc.)

Before we start, we need to understand how carriers actually identify tethering traffic. If you google it, a lot of people will say carriers use TTL to detect tethering. While this could be true, modifying the TTL doesn’t seem to help.

It’s actually pretty simple: the phone tells the carrier on its own!

How do you know? Just go to whatsmyip.org and check whether the IP address you see on the phone is the same as the one you see on a device that’s using the hotspot.

You will see that you actually get two different IPs! This means that as you’re tethering, the traffic isn’t sent through the same channel as the traffic on the phone!

As a matter of fact, when you’re tethering, the phone will use a different APN (usually called dun) instead of the regular APN that traffic goes through. It’s super easy for the carrier to then throttle your traffic.

Now that we’ve figured out how the carrier detects tethering traffic, let’s figure out how to bypass it.

Option 1: Use a device that doesn’t expose your traffic.

If you have a rooted Android phone, I believe you will be able to modify the iptables rules to route traffic to the phone’s “regular” APN. You might also be able to find some MiFi devices on the market that do this for you automatically.

However, most of us no longer use rooted phones, and this is basically impossible on iOS too. What do we do?

Option 2: Reroute traffic back to the “regular” link using a proxy.

There is a very interesting app called Every Proxy, which can set up a proxy server on the phone. If you point the other device that connects to the hotspot (like a PC/Mac) at that proxy, all the tethering traffic is rerouted back through the phone, and the carrier can no longer identify it. For the proxy setup on the PC/Mac, just use the system proxy settings.
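To check that the proxy is really rerouting traffic, you can repeat the IP comparison from earlier on the tethered computer: once directly and once through the phone’s proxy. The sketch below is a minimal illustration that assumes the app is running an HTTP proxy. The address `192.168.43.1:8080` is a placeholder (use whatever the app shows on your phone), and `api.ipify.org` is just one plain-text IP echo service I picked for convenience; the function names are hypothetical.

```python
import urllib.request

# Assumption: any service that returns the caller's public IP as plain text works here.
IP_ECHO_URL = "https://api.ipify.org"


def make_opener(proxy_host=None, proxy_port=None):
    """Build an opener that uses the phone's HTTP proxy, or no proxy at all."""
    if proxy_host is None:
        # An empty mapping turns off any system-wide proxy for this opener,
        # so the request goes straight out over the hotspot link.
        handler = urllib.request.ProxyHandler({})
    else:
        proxy = f"http://{proxy_host}:{proxy_port}"
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


def public_ip(opener, url=IP_ECHO_URL, timeout=10):
    """Fetch the public IP address as seen by the echo service."""
    with opener.open(url, timeout=timeout) as resp:
        return resp.read().decode().strip()


def compare(proxy_host, proxy_port):
    """Return (direct_ip, proxied_ip, differ) for the tethered computer."""
    direct = public_ip(make_opener())
    proxied = public_ip(make_opener(proxy_host, proxy_port))
    return direct, proxied, direct != proxied


if __name__ == "__main__":
    # Placeholder address: replace with the host and port shown in the proxy app.
    direct, proxied, differ = compare("192.168.43.1", 8080)
    print("direct :", direct)
    print("proxied:", proxied)
    print("proxy reroutes traffic:", differ)
```

If the two addresses differ, the proxied traffic is leaving through the phone’s regular link rather than the tethering one, which is what we want.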

Now let’s see what I get:

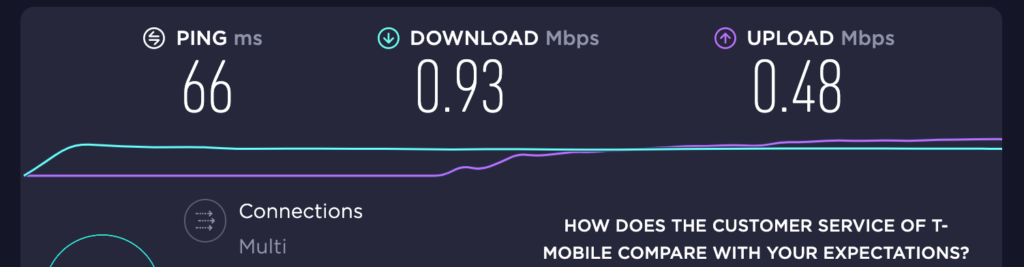

Before:

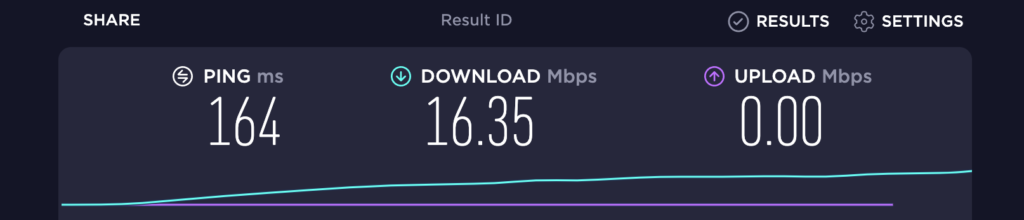

After:

A significant improvement, I’d have to say!

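The core of the metaverse is the fight over the next generation of personal computing devices

The first generation of personal computing devices, the PC, was by and large an open platform.

The second generation, the phone, built a new ecosystem. At the same time, the digitization of software distribution let Apple and Google collect enormous platform taxes and make a fortune. Apple clearly dislikes the advertising business model, and the most fundamental reason is that it lets apps avoid paying the platform tax. That is how the feud between Facebook and Apple began. In essence it is a competition between two different business models.

FB is a beneficiary of the second-generation platforms, yet Apple’s position as local overlord clearly makes FB uneasy. It is too late to enter the phone race now (not to mention that FB already tried once and failed). Rather than keep tangling with Google and Apple on phones, FB decided to skip the phone altogether and go straight to what it believes is the next personal computing platform: VR.

From a business standpoint, this decision is an obvious one. There is little splash left to make on the existing track, so it is better to open a new battlefield. If the competitors bet wrong, the odds of winning are quite good.

The question now is how big the pie is, and how long it will take to reach the scale of phones.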

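To answer that, we first need to ask: what is the root cause of VR developing so slowly?

Is it software? Many people say the lack of a killer app so far is the main reason VR has developed slowly.

I think software and hardware advance hand in hand, and the core reason VR is slow lies mainly in the hardware. When a VR device needs a break after 45 minutes of use, it is still far from being able to take over people’s time.

So what is the core reason VR hardware develops slowly? As I see it, the hardware technology a VR device needs is not fundamentally different from what a phone needs. CPU, display, and battery, the technologies that limit further progress in phones, are the same technical barriers that limit VR.

So the core of the Metaverse is a demand for a leap forward in hardware. If CPU, battery, and display technology stagnate, VR will struggle to move forward too.

So going All in on Metaverse means FB is going to start competing with Apple, Intel, and Nvidia for hardware engineers.

Meanwhile, software has to keep up. We expect the VR era to bring new forms of software, but I think the basic problems that software needs to solve already exist: chatting, shopping, gaming, or watching shows, it is all basically within this frame.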

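Rather than discussing the metaverse, consider these practical problems

The metaverse concept has been red-hot lately, with all kinds of capital chasing it. As I see it, though, the metaverse and 5G share a common flaw: at this stage they are basically money grabs, with the concept running far ahead of any application. Still, when I think carefully about what these metaverse players are proposing and the technical problems they would need to solve to get there, I would suggest solving these more practical problems first:

1. Remote work

If every office job in the world could be replaced by remote work, the airline industry would lose an enormous amount of business travel, and so would hotels; commuting traffic would improve greatly, and the uneven development between regions would ease somewhat. In every sense, if remote work could deliver the same efficiency as working face to face, society as a whole would save a great deal of unnecessary spending.

Remote work still has quite a few problems to solve. The simplest are networking and voice communication. Thanks to Zoom’s relentless efforts, the remote meeting experience has improved a lot, but stuttering is still common and microphones and speakers often misbehave. On top of that, a full day of meetings in front of a computer is very hard on the eyes; drawing diagrams is still not that convenient; and people lack a sense of engagement with each other (aka the feeling that you can interrupt someone at any time when you are face to face).

Solving this may take some investment in and improvement of hardware, but overall it is far more realistic than VR, and the market cap is simply enormous. I suggest giving it a lot more thought.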

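2. Remote control

Remote control roughly means having a person operate a machine from a distance. The most intuitive example is a remotely controlled designated driver, but driving is too dangerous, so an easier target may be heavy machinery on construction sites. In a controlled environment, a failure of this kind of control is not as scary.

As labor costs in developed countries keep rising, so does the cost of the workers who operate and drive heavy machinery. In a sense, this is the third kind of outsourcing after customer service and programming: outsourcing machine operators.

The biggest problem with outsourcing is communication cost, so the savings actually achieved in the end may not be impressive, but the problems to be solved are far clearer and better defined than those of the metaverse.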

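3. Remote medicine

This is similar to remote control, except that what is controlled remotely is surgical or diagnostic equipment, so that regions with underdeveloped medical resources can also be treated by the best doctors.

The precision required makes this dramatically harder, but from a public-interest perspective its value to human society, and to reducing uneven development between regions, is immeasurable. Cases from all over the world would also give frontier medical research valuable data and advance human medicine.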

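What is the real direction of development?

The metaverse is being hyped everywhere right now; my suggestion is to build it up from a practical application. First find a real VR application, then build profiles, social features, and other elements on top of it. It is like starting from video sharing and gradually building a community centered on video.

In this sense, WeChat matches every idea of the metaverse perfectly, apart from not being VR. Blockchain and cryptocurrency, apart from not being VR, have likewise built a virtual-world banking system that is rather different from the real world’s.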

About Processes

People who know me know that I have a biased view of processes, probably because I’m working for Facebook at this moment, and generally speaking larger companies have more processes than smaller ones. I’ve had a lot of ideas come up while discussing processes with my coworkers, and I’d like to put down some more structured thoughts in this article.

First of all, I believe what makes a company / organization / team successful boils down to two very fundamental things:

People, Domain.

- Key people are a multiplier for an organization. They unlock potential and make the impossible possible. They are people of integrity who consider faking, or even taking shortcuts, unacceptable, and they would quit if forced to do so. They don’t blindly follow instructions, and they have their own way of thinking. They have the domain knowledge and are willing to master new domains whenever needed. They ask the rest of the org to be like them, or at least partly like them.

- Domain defines how well the people power can be unleashed. Even a super talented person cannot fight against trends. A hot domain may make someone less talented look super talented. However, it’s worth noting that if you have the right people, they can find the right domain (think of the shift to mobile and the companies that succeeded and failed).

The rest plays a much less critical role. Even with a bad process, good people will still figure out how to navigate it and find the answer, while a good process cannot magically turn something shitty into something great.

Steve Jobs had a great comment on process vs content, where I take “content” to mean “the content great people produce”. I couldn’t agree more with him.

When I think about the greatest CEOs in the tech industry, they all seem to care a lot about details, and they have a lot of thoughts on them. Take Elon Musk as an example: as busy as he is as a CEO (of more than one company), he still knows how a rocket works in detail.

So am I against processes? No. I think they are important, though in a different way.

Processes are there to make the best use of the talented people.

While we want to work with as many talented people as possible, the sad fact is that we only have a few. Processes exist because they give those people leverage, or reduce the friction of having them take care of everything else. In other words, they let great people become more efficient at creating content.

Let’s take an example. A team used to have an unstructured roadmapping process. This worked great because the team had a great TL who understood both product and engineering. It worked until the team started to grow, potentially by 2x or 3x: if nothing changes, the TL can no longer cover every roadmapping discussion.

To address this problem, we can create a process like this:

1. Make sure all product ideas are vetted and ordered upfront.

2. Make sure every product idea has a well-documented plan so that it’s super clear to the TL what needs to be built.

3. Have the TL go through the docs and give early feedback on each project.

4. Have a review session to finalize the roadmap.

In this process, step 1 prevents low-quality ideas from reaching the roadmapping session. Step 2 reduces the back & forth between product & engineering, and also pushes product to think through the project in more detail. Step 3 turns the back & forth into async communication, and step 4 ensures “eventual consistency” between product & engineering. This process introduces more work for the product team, but increases the value of the time the TL puts into it.

While in this example the talent is an engineer, it could be anyone, including yourself. It could also be an important, highly productive team in the org.

Processes don’t make sense if you don’t have talent on the team, or if that talent is not a constrained resource. Processes increase the total amount of work for people, and they only start to make sense when they shift some work from the talented to the rest of the team.

Alright, to end this post, here are my two principles on processes:

- Processes are useless if you don’t have the talented person.

- Build processes for that person so they create more great content.

There will be only one winner in Food, Drink & Local Services

Players serving a high-frequency need can beat players serving a low-frequency need extremely easily.

Yelp would definitely agree with this statement: they’re getting killed by Google.

Food is the highest-frequency need, then drinks, then other local services (grocery, autos, karaoke, kart racing, kayaking, weddings, etc.).

You probably know that you can order drinks from the app where you order food, rather than from the app where you order grocery delivery, even though the two businesses are not that different in nature.

Doordash will almost certainly enter the grocery delivery business, killing Instacart and Shipt.

Doordash has to face Yelp if it wants to keep its high valuation after COVID. Yelp is currently worth only 2.67B while Doordash is worth 65B. Buying Yelp would be a smart move that would pay for itself almost immediately after COVID.

Ride sharing will be the next one. Wouldn’t it be nice if you could drop some riders off at a restaurant, immediately pick up some food from the very same restaurant (or the same plaza), deliver it to a residential area, and then get another ride request from the residential area to the business area?

The list goes on and on. The path is clear, the rest is just execution. Doordash had amazing execution in the past (and strategy as well for sure!) – otherwise they would not have won the delivery war.

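Could “qiafan” sponsorships be the future of internet advertising?

Qiafan (slang, literally “eating a meal”) is when an influencer or uploader is paid to run a soft ad. Sometimes the soft ad is not published on its own but slipped into the middle of regular content, usually accompanied by a screen full of “didn’t see that coming” bullet comments.

Internet advertising has evolved from the early spammy banner ads and pop-ups to today’s in-feed ads, and ad formats have become more and more native. The reason is easy to understand: native ads fit the platform’s content better and attract more clicks. At the same time, targeting has become more and more important with the rise of performance advertising. But targeted advertising requires collecting large amounts of user data, and that business model is being challenged to varying degrees:

- Apple has raised the privacy banner, and Android vendors will be forced to follow. Xiaomi is the first Android phone maker to act on this, but it will definitely not be the last.

- European countries and others cannot collect tax from this business model, so they have begun restricting the collection of personal data on various grounds and increasing fines.

- The passage of California Proposition 24 shows that ordinary people’s privacy awareness has begun to awaken.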

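Targeted advertising will run into more and more difficulties, while qiafan-style advertising has slowly begun to rise:

- It is more native than native ads, blending into the content. Many creators’ sponsored segments are also high in quality.

- The bond between users and creators makes users quite happy to watch such ads.

- It does not depend on precise data collection, yet still offers a degree of targeting.

The main problems are that the barrier to advertising becomes higher, the cost of trial and error becomes high for new advertisers, quality control is difficult, and scale is hard to achieve. If these problems can be solved, spring may arrive for qiafan advertising. Here is my basic thinking:

- Advertisers provide templates, and creators simply read the ad copy according to the template, or AR is used to place the uploader’s likeness into the template.

- Creators pre-record ads of the same length but with different content, so that simple targeting can be applied at playback time.

- An intermediary platform handles ad quality control, similar to Douyin’s small-scale distribution for gauging quality, and helps creators produce ads that perform well.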

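Will WSB trigger a liquidity crisis in US stocks?

GME’s float is not large, but if the longs really refuse to sell, the wildly growing margin requirements on shorts could force funds and market makers to sell other stocks to cover, locking up a large amount of liquidity. Other funds might see this and short large-cap stocks, which WSB’s retail traders would then target in turn. Could that set off a system-wide liquidity crisis?

Regulators will of course step in urgently before that happens, but the timing will be delicate: intervene too early, before systemic risk has formed, and the move lacks legitimacy; intervene too late, and it may be impossible to stop the trend. Will this episode ultimately end with the longs choosing to close their positions under moral condemnation for creating systemic risk?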

Another perspective on WSB’s war against the shorts

With great power comes great responsibility.

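The recently concluded presidential transition ended with Trump being banned jointly by the major internet platforms. Facebook’s role in this drama was clearly smaller than Twitter’s. In a broader sense, Twitter has been a lead character in the Trump story all along, from beginning to end.

Going back further, the more interesting episode is probably the young people on TikTok who left Trump’s rally empty.

As for the recent events on WSB, I would rather call them a victory for Reddit. This obviously brought in a lot of traffic, but what I see is the power of the people Reddit brought together.

Bringing people’s power together.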

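This does not seem to have happened on Facebook for a long time. Going back, you get to the Russian government using Facebook to interfere in the election. It may indeed have had some effect, but I find it hard to believe it swayed the final result; after all, even in 2020 Trump’s vote total was still the second highest in history.

Further back still, I vaguely remember Facebook playing an important role in the Arab Spring.

But today, I can hardly recall whose power Facebook has brought together in the past few years, what trend it has led, or what new focal point it has created.

The decline of an empire may begin right here.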

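The safest investment of 2021: TSM

Safe = beating the market at the lowest risk

Why am I bullish on TSM?

- Apple’s steady stream of cash ensures TSM’s investment in R&D and its lead in advanced process nodes. In the recent rumors about new Macs, Apple has dropped its earlier, overly aggressive all-USB-C design, making it plain that it intends to broaden its audience. It is not impossible for the Mac to eat into the PC market, and all of this is good news for TSM.

- Autonomous driving will bring the biggest change to human society since the spread of the internet, and all of it depends on powerful compute. For now, Tesla’s chip with 100-plus TOPS of compute will probably still fall short for high-level autonomous driving. NIO has already started the arms race in autonomous driving, and every EV maker will be forced to join. Driverless cars will need a massive amount of compute, and whichever carmaker wins, TSM reaps the benefit.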

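TSMC’s roadmap from here should be very clear:

Most advanced node: supplied to Apple for iPhone and Mac, with a small amount left for vendors such as Qualcomm and Samsung

Second most advanced node: supplied to AMD, MTK, and Qualcomm

Older nodes: driverless-car chips

The cost of a production line is largely absorbed in the first stage and fully absorbed in the second, so the third stage is pure income.

Samsung still trails TSMC in both process and cost, and perhaps the only reason it gets orders is that it still has capacity. After all, even at its worst, Samsung’s 5nm is still on par with TSMC’s 10nm.

TSMC’s share price will climb higher and higher as the new carmakers’ arms race in driverless technology heats up.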